tl;dr:

If you are a Debian user who knows git, don't work with Debian source packages. Don't use apt source, or dpkg-source. Instead, use dgit and work in git.

Also, don't use: VCS links on official Debian web pages, debcheckout, or Debian's (semi-)official gitlab, Salsa. These are suitable for Debian experts only; for most people they can be beartraps. Instead, use dgit.


Struggling with Debian source packages?

A friend of mine recently asked for help on IRC. They're an experienced Debian administrator and user, and were trying to: make a change to a Debian package; build and install and run binary packages from it; and record that change for their future self, and their colleagues. They ended up trying to comprehend quilt.

quilt is an ancient utility for managing sets of source code patches, from well before the era of modern version control. It has many strange behaviours and footguns. Debian's ancient and obsolete tarballs-and-patches source package format (which I designed the initial version of in 1993) nowadays uses quilt, at least for most packages.

You don't want to deal with any of this nonsense. You don't want to learn quilt, and suffer its misbehaviours. You don't want to learn about Debian source packages and wrestle dpkg-source.

Happily, you don't need to.

Just use dgit

One of dgit's main objectives is to minimise the amount of Debian craziness you need to learn. dgit aims to empower you to make changes to the software you're running, conveniently and with a minimum of fuss.

You can use dgit to get the source code to a Debian package, as a git tree, with dgit clone (and dgit fetch). The git tree can be made into a binary package directly.

The only things you really need to know are:

- By default dgit fetches from Debian unstable, the main work-in-progress branch. You may want something like dgit clone PACKAGE bookworm,-security (yes, with a comma).

- You probably want to edit debian/changelog to make your packages have a different version number.

- To build binaries, run dpkg-buildpackage -uc -b.

- Debian package builds are often disastrously messy: builds might modify source files, and the official debian/rules clean can be inadequate, or crazy. Always commit before building, and use git clean and git reset --hard instead of running clean rules from the package.
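The steps in the list above can be scripted. The following is a minimal Python sketch of a hypothetical helper (local_build_plan and run are illustrative names, not part of dgit), assuming dgit, git, dpkg-dev and devscripts are installed. It uses the commands the list mentions, plus two assumptions of its own: dch --local from devscripts as one way of giving debian/changelog a distinct version, and git clean -xdf as one common choice of git clean flags. By default it only prints what it would run.

```python
import subprocess
from pathlib import Path


def local_build_plan(package, suite=None, suffix="+local", message="Local change"):
    """Return (cwd, argv) pairs for a clone / changelog / commit / build / clean cycle.

    Hypothetical helper: package, suite, suffix and message are illustrative
    parameters of this sketch, not dgit options.
    """
    tree = Path(package)  # dgit clone checks out into ./PACKAGE by default
    clone = ["dgit", "clone", package]
    if suite is not None:
        clone.append(suite)  # e.g. "bookworm,-security"; omitted means unstable
    return [
        (Path("."), clone),
        # Assumption: devscripts' dch; --local appends a suffix to the version.
        (tree, ["dch", "--local", suffix, message]),
        # Commit before building, since package builds may modify source files.
        (tree, ["git", "commit", "-a", "-m", message]),
        # Binary-only build without signing; the .deb files land in the parent dir.
        (tree, ["dpkg-buildpackage", "-uc", "-b"]),
        # Restore the committed tree rather than trusting debian/rules clean.
        (tree, ["git", "clean", "-xdf"]),
        (tree, ["git", "reset", "--hard"]),
    ]


def run(plan, dry_run=True):
    """Print each step; only execute it when dry_run is False."""
    for cwd, argv in plan:
        print(f"[{cwd}] $ {' '.join(argv)}")
        if not dry_run:
            subprocess.run(argv, cwd=cwd, check=True)
    return plan


if __name__ == "__main__":
    run(local_build_plan("hello", "bookworm,-security"))
```

In practice your own edits to the source would go between the clone and the dch step, so you would probably run the steps individually rather than all at once with dry_run=False. Commands are passed as argument lists, so nothing goes through a shell and no quoting is needed.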

Don't try to make a Debian source package. (Don't read the dpkg-source manual!) Instead, to preserve and share your work, use the git branch.

dgit pull or dgit fetch can be used to get updates.

There is a more comprehensive tutorial, with example runes, in the dgit-user(7) manpage. (There is of course complete reference documentation, but you don't need to bother reading it.)

Objections

But I don't want to learn yet another tool

One of dgit's main goals is to save you from learning things you don't need to. It aims to be straightforward, convenient, and (so far as Debian permits) unsurprising.

So: don't learn dgit. Just run it and it will be fine :-).

Shouldn't I be using official Debian git repos?

Absolutely not.

Unless you are a Debian expert, these can be terrible beartraps. One possible outcome is that you might build an apparently working program but without the security patches. Yikes!

I discussed this in more detail in 2021 in another blog post plugging dgit.

Gosh, is Debian really this bad?

Yes. On behalf of the Debian Project, I apologise.

Debian is a very conservative institution. Change usually comes very slowly. (And when rapid or radical change has been forced through, the results haven't always been pretty, either technically or socially.)

Sadly this means that sometimes much needed change can take a very long time, if it happens at all. But this tendency also provides the stability and reliability that people have come to rely on Debian for.

I'm a Debian maintainer. You tell me dgit is something totally different!

dgit is, in fact, a general bidirectional gateway between the Debian archive and git.

So yes, dgit is also a tool for Debian uploaders. You should use it to do your uploads, whenever you can. It's more convenient and more reliable than git-buildpackage and dput runes, and produces better output for users. You too can start to forget how to deal with source packages!

A full treatment of this is beyond the scope of this blog post.


I ended 2022 with a musical retrospective and very much enjoyed writing that blog post. As such, I have decided to do the same for 2023! From now on, this will probably be an annual thing :)

Albums

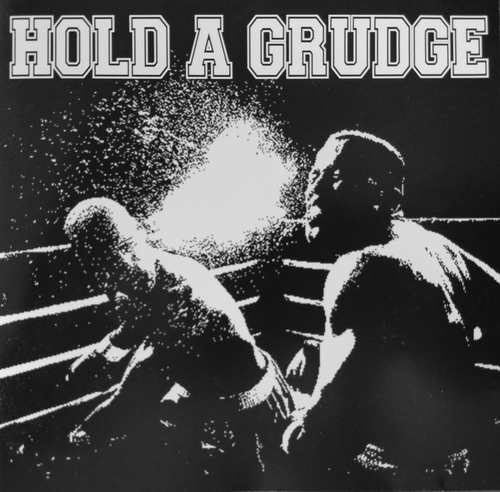

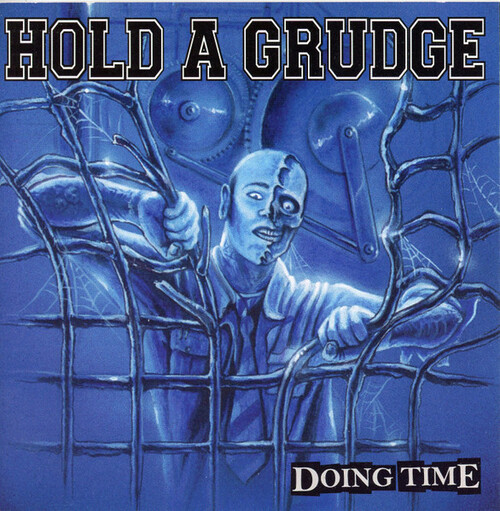

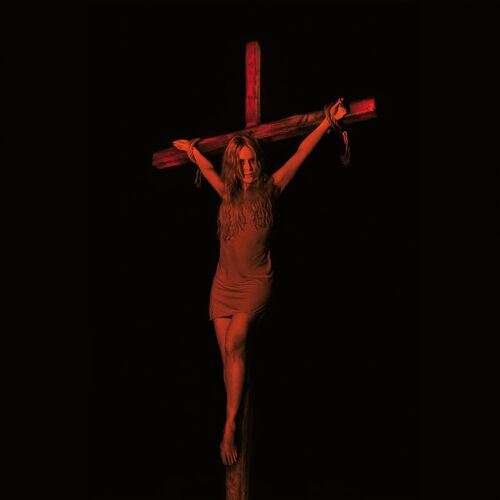

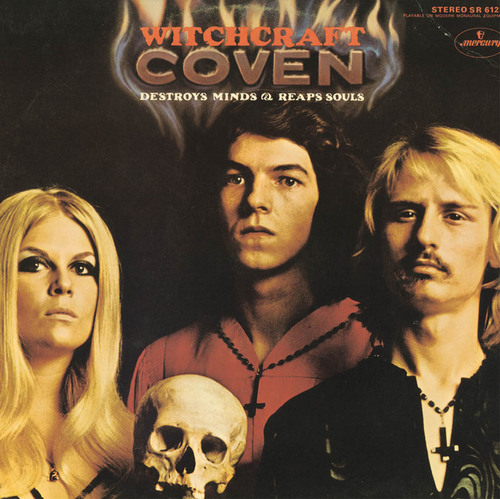

In 2023, I added 73 new albums to my collection: nearly 3 albums every two weeks! I listed them below in the order in which I acquired them.

I purchased most of these albums when I could and borrowed the rest at libraries. If you want to browse, though, I added links to the album covers pointing either to websites where you can buy them or to Discogs when digital copies weren't available.

Once again this year, it seems that Punk (mostly Oi!) and Metal dominate my list, mostly fueled by Angry Metal Guy and the amazing Montréal Skinhead/Punk concert scene.

Concerts

A trend I started in 2022 was to go to as many concerts of artists I like as possible. I'm happy to report I went to around 80% more concerts in 2023 than in 2022! Looking back at my list, April was quite a busy month...

Here are the concerts I went to in 2023:


By the influencers on the famous proprietary video platform


Anyway, my brain suddenly decided that I needed a red wool dress, fitted enough to give some bust support. I had already made a

I knew that I didn't have enough fabric to add a flounce to the hem, as in the cotton dress, but then I remembered that some time ago I fell for a piece of fringed trim in black, white and red. I did a quick check that the red wasn't clashing (it wasn't) and I knew I had a plan for the hem decoration.

Then I spent a week finishing other projects, and the more I thought about this dress, the more I was tempted to have spiral lacing at the front rather than buttons, as a nod to the kirtle inspiration.

It may end up being a bit of a hassle, but if it is too much I can always add a hidden zipper on a side seam, and only have to undo a bit of the lacing around the neckhole to wear the dress.

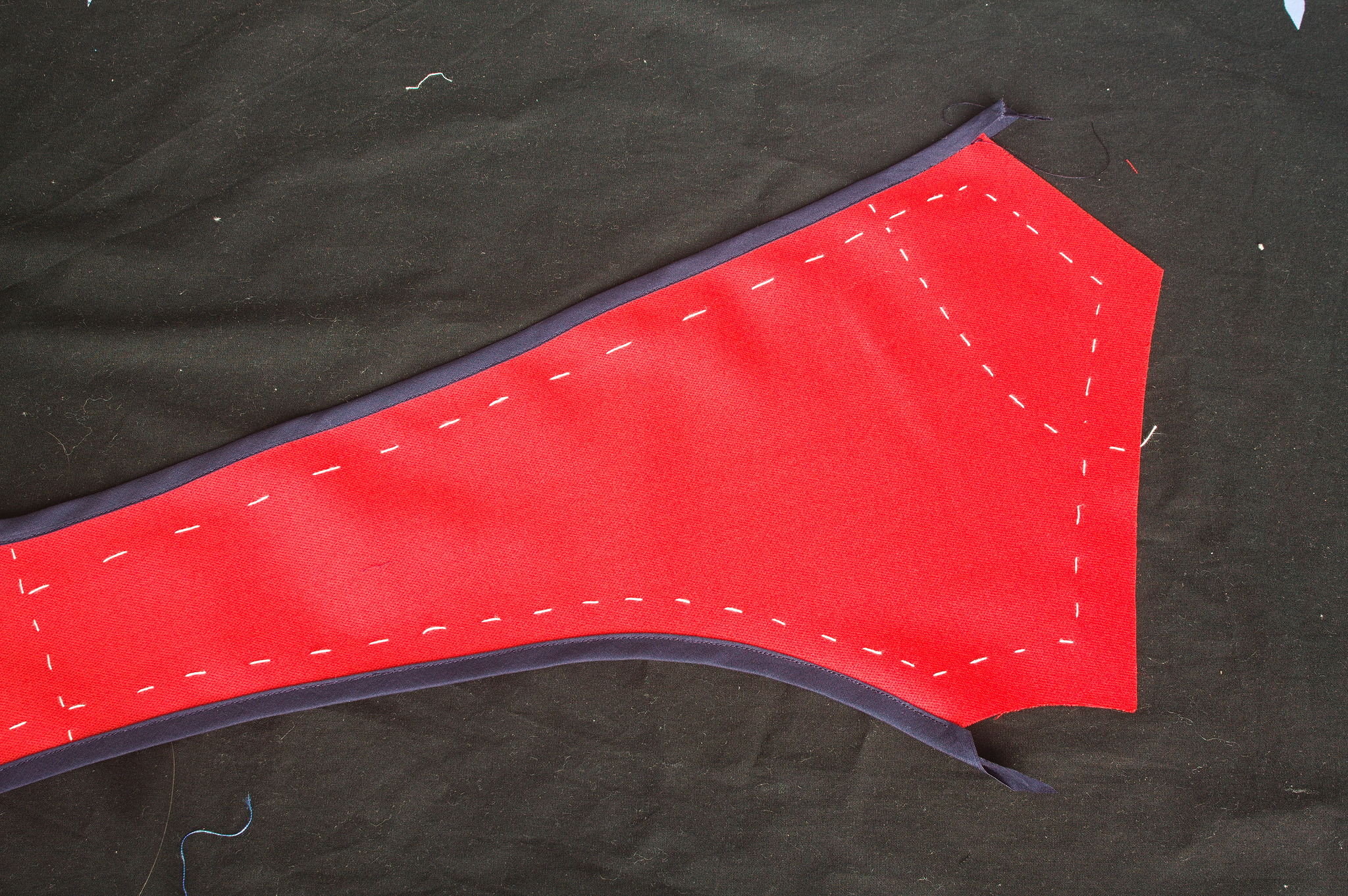

Finally, I could start working on the dress: I cut all of the main pieces, and since the seam lines were quite curved I marked them with tailor's tacks, which I don't exactly enjoy doing or removing, but are the only method that was guaranteed to survive while manipulating this fabric (and not leave traces afterwards).


While cutting the front pieces I accidentally cut the high neck line instead of the one I had used on the cotton dress: I decided to go for it also on the back pieces and decide later whether I wanted to lower it.

Since this is a modern dress, with no historical accuracy at all, and I have access to a serger, I decided to use some dark blue cotton voile I've had in my stash for quite some time, cut into bias strips, to bind the raw edges before sewing. This works significantly better than bought bias tape, which is a bit too stiff for this.


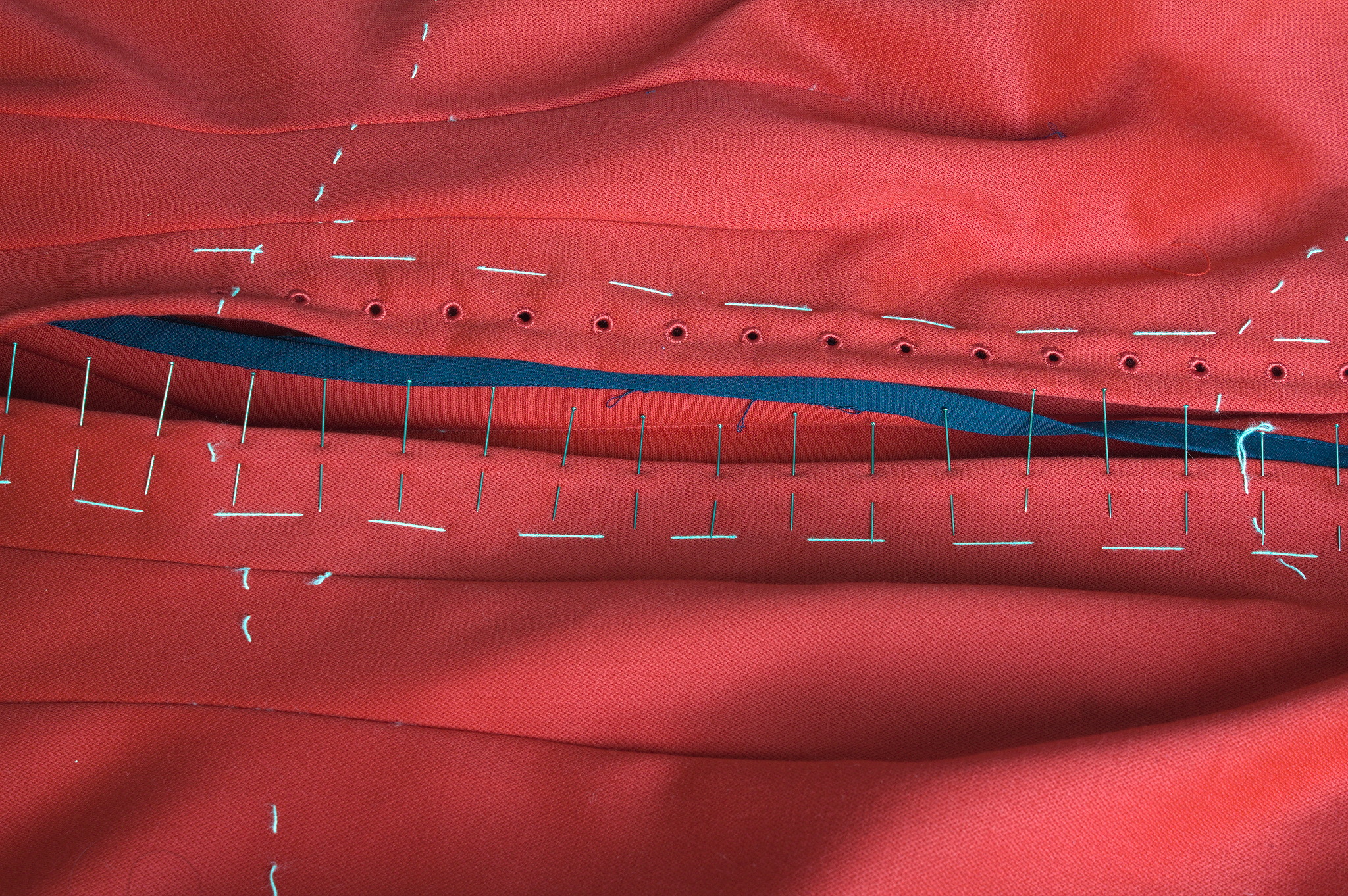

For the front opening, I've decided to reinforce the areas where the lacing holes will be with cotton: I've used some other navy blue cotton, also from the stash, and added two lines of cording to stiffen the front edge.

So I've cut the front in two pieces rather than on the fold, sewn the reinforcements to the sewing allowances in such a way that the corded edge was aligned with the center front, and then sewn the bottom of the front seam from just before the end of the reinforcements to the hem.


The allowances are then folded back, and they are kept in place by the worked lacing holes. The cotton was pinked, while for the wool I used the selvedge of the fabric and there was no need for any finishing.

Behind the opening I've added a modesty placket: I've cut a strip of red wool and a strip of cotton, folded the edge of the strip of cotton to the center, added cording to the long sides, pressed the allowances of the wool towards the wrong side, and then handstitched the cotton to the wool, wrong sides facing. This was finally handstitched to one side of the sewing allowance of the center front.

I've also decided to add real pockets, rather than just slits, and for some reason I decided to add them by hand after I had sewn the dress, so I've left openings in the side back seams, where the slits were in the cotton dress. I've also already worn the dress, but haven't added the pockets yet, as I'm still debating about their shape. This will be fixed in the near future.

Another thing that will have to be fixed is the trim situation: I like the fringe at the bottom, and I had enough to also make a belt, but this makes the top of the dress a bit empty. I can't use the same fringe tape, as it is too wide, but it would be nice to have something smaller that matches the patterned part. And I think I can make something suitable with tablet weaving, but I'm not sure which materials to use, so it will have to be on hold for a while, until I decide on the supplies and have the time for making it.

Another improvement I'd like to add is detached sleeves, both matching (I should still have just enough fabric) and contrasting, but first I want to learn more about real kirtle construction, and maybe start making sleeves that would be suitable also for a real kirtle.

Meanwhile, I've worn it on Christmas (over my 1700s menswear shirt with big sleeves) and may wear it again tomorrow (if I bother to dress up to spend New Year's Eve at home :D )


Read all parts of the series


And here's what he explains about his drawing:


In their drawing we see a YouTube prank channel on a screen, an external trackpad on the right (likely it's not a touch screen), and headphones. Notice how there is no keyboard, or maybe it's folded away.

If you could ask a nice and friendly dragon anything you'd like to learn about the internet, what would it be?


In her drawing, we see again Google - it's clearly everywhere - and also the interfaces for calling and texting someone.

To explain what the internet is, besides the fact that one can use it for calling and listening to music, she says:


When I asked if he knew what actually happens between the device and a website he visits, he put forth the hypothesis of the existence of some kind of


Debian Public Statement about the EU Cyber Resilience Act and the Product Liability Directive

The European Union is currently preparing a regulation "on horizontal cybersecurity requirements for products with digital elements" known as the Cyber Resilience Act (CRA). It is currently in the final "trilogue" phase of the legislative process. The act includes a set of essential cybersecurity and vulnerability handling requirements for manufacturers. It will require products to be accompanied by information and instructions to the user. Manufacturers will need to perform risk assessments and produce technical documentation and, for critical components, have third-party audits conducted. Discovered security issues will have to be reported to European authorities within 24 hours (1). The CRA will be followed up by the Product Liability Directive (PLD) which will introduce compulsory liability for software.

While a lot of these regulations seem reasonable, the Debian project believes that there are grave problems for Free Software projects attached to them. Therefore, the Debian project issues the following statement:


Over roughly the last year and a half I have been participating as a reviewer in ACM's


Nextcloud is a popular self-hosted solution for file sync and share as well as cloud apps such as document editing, chat and talk, calendar, photo gallery etc. This guide will walk you through setting up Nextcloud AIO using Docker Compose. This blog post would not be possible without immense help from Sahil Dhiman a.k.a.


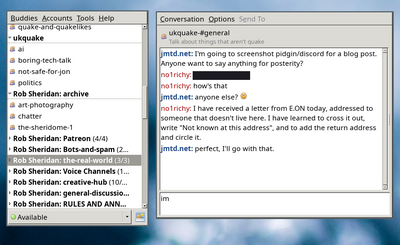

For reasons I won't go into right now, I've spent some of this year working on a refurbished Lenovo ThinkPad Yoga 260. Despite it being relatively underpowered, I love almost everything about it.

Unfortunately the model I bought has 8G RAM which turned out to be more limiting than I thought it would be. You can do incredible things with 8G of RAM: incredible, wondrous things. And most of my work, whether that's wrangling containers, hacking on OpenJDK, or complex Haskell projects, is manageable.

Where it falls down is driving the modern scourge: Electron, and by proxy, lots of modern IM tools: Slack (urgh), Discord (where one of my main IRC social communities moved to), WhatsApp Web
